One of the core capabilities of Databricks is working with structured, semi-structured and unstructured datasets. Recently I was asked how to work with image datasets and access them from notebooks, Databricks SQL and Power BI when Unity Catalog is enabled.

In this blog post, I will walk you through the access needed on the catalog, schema and external location, then how to save the images as an external table, access it from notebooks using PySpark and Databricks SQL, and finally from Power BI.

Access in Unity Catalog

Unity Catalog is a fine-grained governance solution for data and AI on the Lakehouse. See the Databricks Unity Catalog documentation (AWS / Azure) to learn more about this topic. The following privileges are needed:

| Securable | Privileges |
|---|---|
| Catalog | CREATE, USAGE |
| Schema / Database | CREATE, USAGE |
| External Location | CREATE TABLE, READ FILES, WRITE FILES |
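As a sketch, assuming placeholder names for the catalog, schema, external location and principal, the privileges in the table above could be granted with SQL `GRANT` statements. The hypothetical Python helper `build_grants` below only builds the statement strings, using the privilege names exactly as listed in the table. Newer Unity Catalog releases renamed some privileges (for example `USAGE` became `USE CATALOG` and `USE SCHEMA`), so check the documentation for your workspace before running them, e.g. with `spark.sql(...)` from a notebook.

```python
from __future__ import annotations


def quote_ident(name: str) -> str:
    """Back-quote an identifier, escaping any embedded back-quotes."""
    return "`" + name.replace("`", "``") + "`"


def build_grants(catalog: str, schema: str, location: str, principal: str) -> list[str]:
    """Hypothetical helper: one GRANT per securable, using the table's privilege names.

    Older Unity Catalog privilege names are assumed here; newer releases use
    USE CATALOG / USE SCHEMA instead of USAGE.
    """
    who = quote_ident(principal)
    return [
        f"GRANT CREATE, USAGE ON CATALOG {quote_ident(catalog)} TO {who}",
        f"GRANT CREATE, USAGE ON SCHEMA {quote_ident(catalog)}.{quote_ident(schema)} TO {who}",
        f"GRANT CREATE TABLE, READ FILES, WRITE FILES ON EXTERNAL LOCATION "
        f"{quote_ident(location)} TO {who}",
    ]


if __name__ == "__main__":
    # Placeholder names; in a notebook each statement could be run with spark.sql(stmt).
    for stmt in build_grants("images_catalog", "images_schema", "images_location", "data-team"):
        print(stmt)
```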

Setup

Image Files – I have sample image files in an S3 bucket, and this bucket is added as an External Location (AWS / Azure) in Unity Catalog. The path is of the form s3://<Bucket Name>/images.

Databricks Cluster – When Unity Catalog is enabled for a workspace, we have to specify a Cluster Access Mode while provisioning a cluster. At the time of writing, there are two access modes that support Unity Catalog:

- Single User – supports workloads in all programming languages and supports Unity Catalog.

- Shared – supports Python and SQL and supports Unity Catalog, but has some limitations (AWS / Azure).

I used Single User mode because it supports Python UDFs, which are currently not supported in Shared access mode.

How to Read Image Files

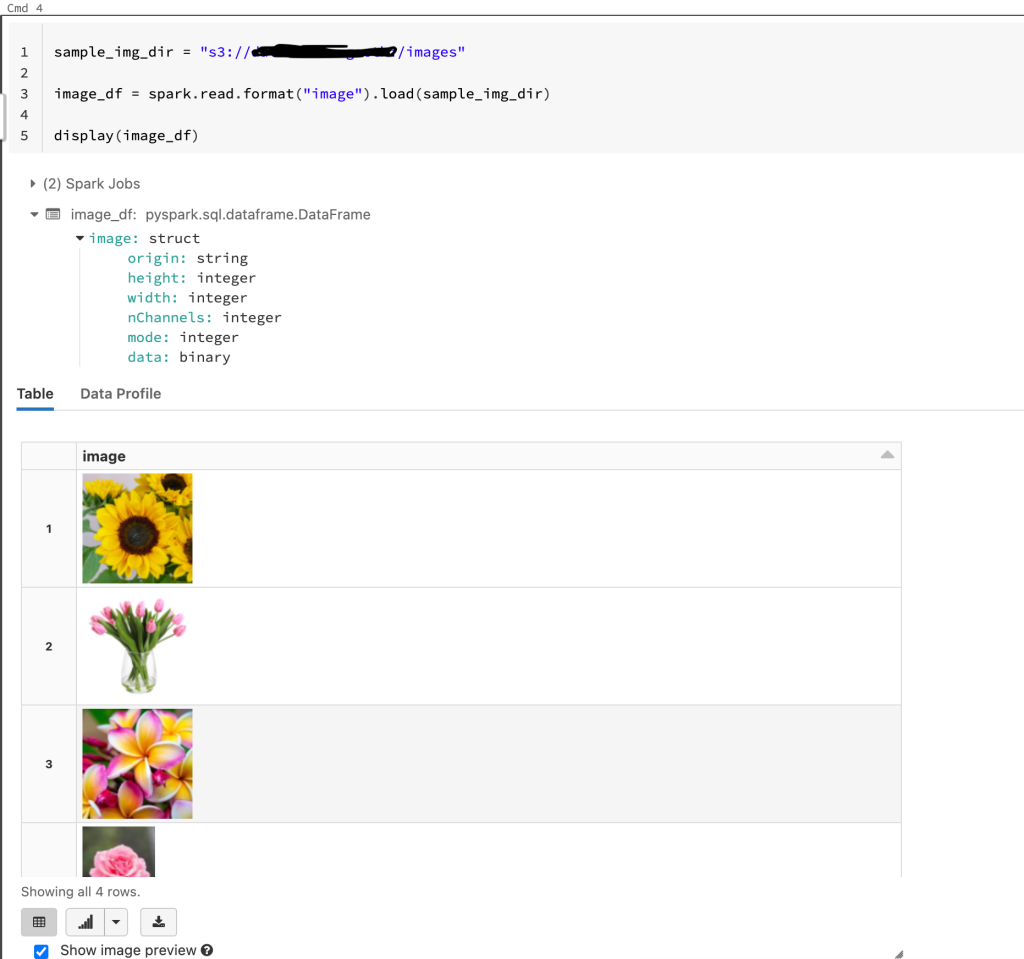

To read image files in Databricks, we can use the “image” format, which works for JPEG, PNG and other common formats. However, the image format has a few limitations, which can be avoided by using the “binaryFile” format instead.

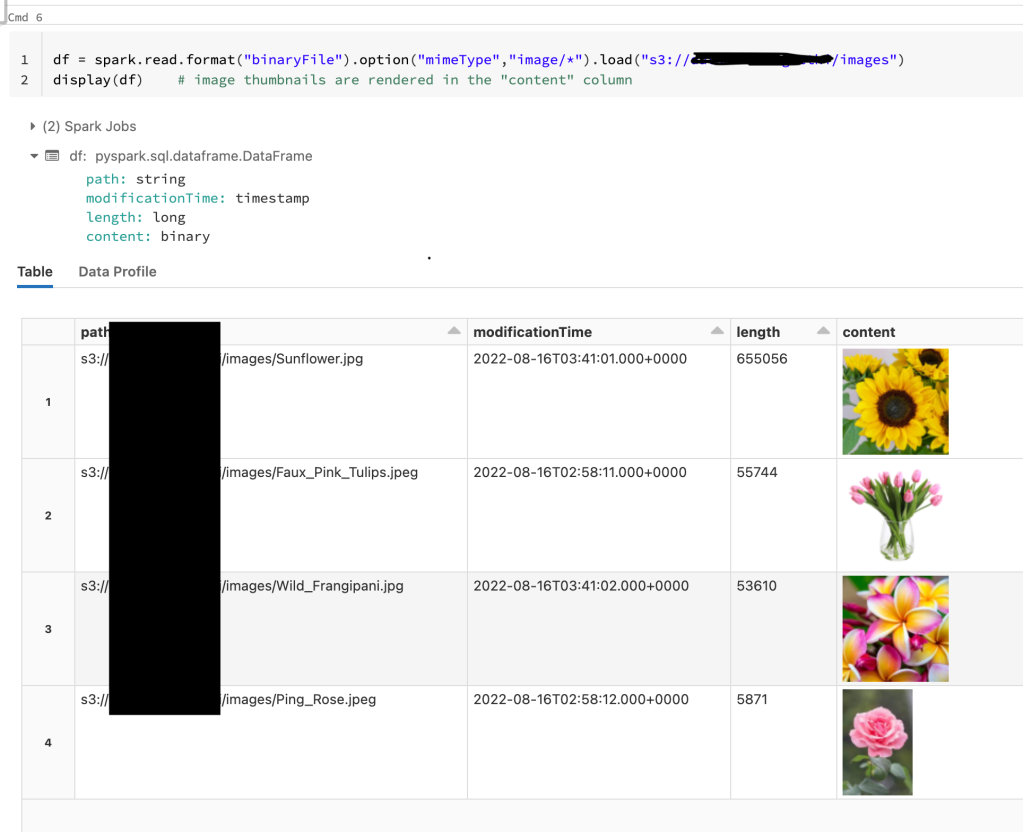

The binary file format reads each file as binary and converts it into a single record that contains the raw contents and metadata of the file. If the file name has an image extension, image preview is enabled automatically. Alternatively, we can force image preview by setting the “mimeType” option to “image/*” to annotate the binary column. Images are decoded based on the format information in the binary content; unsupported files appear as a broken image icon.
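To make the record layout concrete, here is a minimal plain-Python sketch that mimics what the binaryFile reader produces: one record per file with `path`, `modificationTime`, `length` and `content` columns. It is only an illustration; in a notebook the equivalent read would be `spark.read.format("binaryFile").option("mimeType", "image/*").load("s3://<Bucket Name>/images")`. The helper name `read_binary_files` and the extension-based `is_image` flag are assumptions made for this example (the flag roughly approximates the automatic preview for files with an image extension).

```python
from __future__ import annotations

import mimetypes
import os
import tempfile
from datetime import datetime, timezone


def read_binary_files(directory: str) -> list[dict]:
    """Hypothetical helper: one record per file, loosely mirroring Spark's
    binaryFile columns (path, modificationTime, length, content)."""
    records = []
    for name in sorted(os.listdir(directory)):
        path = os.path.join(directory, name)
        if not os.path.isfile(path):
            continue  # this sketch skips sub-directories
        stat = os.stat(path)
        with open(path, "rb") as fh:
            content = fh.read()  # raw bytes, no image decoding
        mime, _ = mimetypes.guess_type(name)
        records.append({
            "path": path,
            "modificationTime": datetime.fromtimestamp(stat.st_mtime, tz=timezone.utc),
            "length": stat.st_size,
            "content": content,
            # Extra field, NOT part of binaryFile: True when the extension maps to
            # an image MIME type, approximating the automatic image preview.
            "is_image": bool(mime and mime.startswith("image/")),
        })
    return records


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        # Fake files: the first bytes of a JPEG header, and a plain text file.
        with open(os.path.join(d, "rose.jpg"), "wb") as fh:
            fh.write(b"\xff\xd8\xff\xe0")
        with open(os.path.join(d, "notes.txt"), "wb") as fh:
            fh.write(b"hello")
        for rec in read_binary_files(d):
            print(os.path.basename(rec["path"]), rec["length"], rec["is_image"])
```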

In the screenshot below, we use format = image, and since all the files have the JPEG extension, we get an image preview.

In the screenshot below, when we use format = binaryFile with the option mimeType = “image/*”, we get not only a preview of the image but also other file properties, such as the path, modification time and length.

How to Save Image Data and Image Files as a Delta Table

So far we have only displayed the image datasets without saving them. Let’s see how to save the data and the images as a Delta table.

In the screenshot below, we create a dummy dataset with three columns: Id, Flower_Name and Location.

Please note: the Location column only holds the S3 path where each file resides, so displaying this DataFrame does not render any images yet.

Next, we need to read the image files from the S3 bucket and create a separate DataFrame with image-related properties.

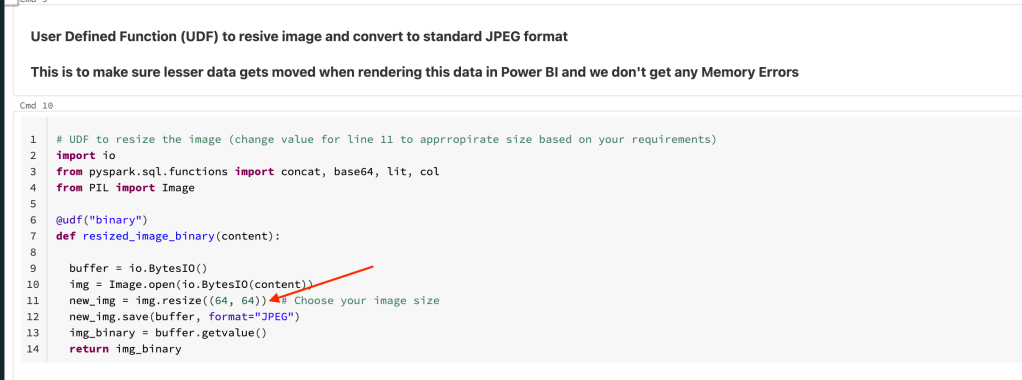

Python UDF – We use a Python user-defined function (UDF) to resize each image to our requirements. This is needed because smaller rows and columns help avoid potential memory errors when the data is displayed in a Power BI dashboard. The UDF then converts the image to a Base64-encoded JPEG, which helps the files load quickly.

In the screenshot below, the values on line 11 (marked with a red arrow) can be changed to resize the images as required.
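Why resizing matters can be shown with a little arithmetic: Base64 encodes every 3 bytes as 4 characters, so the encoded string is roughly a third larger than the raw image and grows with the pixel count. The sketch below assumes the UDF shrinks each image to fit a bounding box while preserving the aspect ratio and never enlarging it (similar in spirit to Pillow's `Image.thumbnail`). The function names and the 250 × 250 box are hypothetical, not the values from the screenshot.

```python
from __future__ import annotations

import base64


def fit_within(width: int, height: int, max_width: int, max_height: int) -> tuple[int, int]:
    """Shrink (width, height) to fit inside the box while keeping the aspect ratio.
    Images that already fit are left as-is (never upscaled)."""
    scale = min(max_width / width, max_height / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))


def base64_length(num_bytes: int) -> int:
    """Characters needed to Base64-encode num_bytes: 4 chars per 3-byte group, padded."""
    return 4 * ((num_bytes + 2) // 3)


if __name__ == "__main__":
    # The formula agrees with the standard-library encoder.
    assert all(base64_length(n) == len(base64.b64encode(bytes(n))) for n in range(100))
    # Hypothetical 250 x 250 bounding box applied to a 4000 x 3000 photo.
    print(fit_within(4000, 3000, 250, 250))  # (250, 188)
    # Encoded length of a hypothetical 3 MB image.
    print(base64_length(3_000_000))  # 4000000
```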

Next, read the image files using the binaryFile format, select the path and content columns, and pass the content column to the UDF to get the resized image in binary format, which is then converted to a Base64-encoded JPEG.
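The final encoding step of the UDF can be sketched in plain Python. This assumes the content has already been resized and re-encoded as JPEG (the resizing itself, typically done with a library such as Pillow, is omitted so the example needs only the standard library). It also assumes the output is prefixed with `data:image/jpeg;base64,`, since Power BI's Image URL data category generally needs a data URI rather than a bare Base64 string. The names `to_jpeg_data_uri` and `encode_images` are hypothetical; in a notebook a function like `to_jpeg_data_uri` could be wrapped with `pyspark.sql.functions.udf(..., StringType())` and applied to the content column.

```python
from __future__ import annotations

import base64

# Assumed prefix so that Power BI can treat the value as an image URL.
JPEG_DATA_URI_PREFIX = "data:image/jpeg;base64,"


def to_jpeg_data_uri(jpeg_bytes: bytes) -> str:
    """Hypothetical UDF body: Base64-encode JPEG bytes and wrap them in a data URI."""
    if not jpeg_bytes.startswith(b"\xff\xd8"):
        # JPEG files start with the SOI marker FF D8; reject anything else.
        raise ValueError("expected JPEG bytes (SOI marker FF D8)")
    return JPEG_DATA_URI_PREFIX + base64.b64encode(jpeg_bytes).decode("ascii")


def encode_images(rows: list[tuple[str, bytes]]) -> list[tuple[str, str]]:
    """Map (path, content) rows to (path, resized_image_base64) rows."""
    return [(path, to_jpeg_data_uri(content)) for path, content in rows]


if __name__ == "__main__":
    # Smallest possible "JPEG": just the SOI and EOI markers (placeholder bytes).
    rows = [("s3://<Bucket Name>/images/rose.jpg", b"\xff\xd8\xff\xd9")]
    print(encode_images(rows))
```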

At this stage we have two DataFrames: one with the data and another with the image-related properties. We now need to combine them so that each record holds both the data and its image properties.

In the screenshot below, we join the two DataFrames by matching the image file name in the Location field of the dataset against the Path field of the image DataFrame. The joined result is saved as a new DataFrame, which we then write as a Delta table.
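The matching logic of this join can be sketched in plain Python, assuming the key is the file name (the last path segment) taken from the dataset's Location value and from the image path. The helpers `file_name` and `join_on_file_name` and the sample rows are hypothetical; in PySpark the same key could be derived with `element_at(split(col("Location"), "/"), -1)` from `pyspark.sql.functions` on both DataFrames before calling `join`.

```python
from __future__ import annotations

import posixpath


def file_name(uri: str) -> str:
    """Last path segment of a URI such as s3://bucket/images/rose.jpg."""
    return posixpath.basename(uri.rstrip("/"))


def join_on_file_name(dataset: list[dict], images: list[dict]) -> list[dict]:
    """Inner-join dataset rows (Location) with image rows (path) on the file name."""
    images_by_name = {file_name(img["path"]): img for img in images}
    joined = []
    for row in dataset:
        img = images_by_name.get(file_name(row["Location"]))
        if img is not None:  # inner join: drop rows without a matching image
            image_columns = {k: v for k, v in img.items() if k != "path"}
            joined.append({**row, **image_columns})
    return joined


if __name__ == "__main__":
    # Hypothetical sample rows using the dataset's column names.
    dataset = [
        {"Id": 1, "Flower_Name": "Rose", "Location": "s3://bucket/images/rose.jpg"},
        {"Id": 2, "Flower_Name": "Tulip", "Location": "s3://bucket/images/tulip.jpg"},
    ]
    images = [{"path": "s3://bucket/images/rose.jpg",
               "resized_image_base64": "data:image/jpeg;base64,/9j/2Q=="}]
    print(join_on_file_name(dataset, images))
```

Matching on the file name rather than the full path tolerates differences in how the two paths are written, but it assumes file names are unique: if two folders contain the same name, this sketch keeps the last image it sees, whereas a Spark join would emit one row per match.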

Displaying the table shows the data and image metadata stitched together for each row, and we no longer need to specify an image format when reading it. The image data is stored in a format that is rendered as an image when displayed.

Query Data in Databricks SQL

Let’s query this table in Databricks SQL. And yes, the image data is visible as part of the SELECT statement results.

Render Data in Power BI

Let’s render this table in Power BI. After we change the Data Category of the resized_image_base64 column to Image URL, the image column renders properly.

Git Code Repository :